Kubernetes is still relatively new, so many organizations don't yet have much in-house expertise, and that leads to a lot of questions about implementing, securing, and optimizing it. Recently, we ran a webinar to discuss how to run K8s securely and efficiently, and we answered some great questions at the end. The questions we get in our webinars are often ones that many others have as well, so we’re sharing the top five from that session here.

1) Are there any good tools for estimating or measuring reasonable resource limits in Kubernetes?

We have an open source project called Goldilocks, available on GitHub, that helps with that. If you want to get your resource recommendations just right, Goldilocks compares your application's actual memory and CPU usage against the resource requests and limits you’ve set, then recommends values that are closer to what you're actually using. By using the Kubernetes vertical-pod-autoscaler (VPA) in recommendation mode, we’re able to see a suggestion for resource requests on each of our apps. Goldilocks creates a VPA for each deployment in a namespace and then queries them for information.
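To make "recommendation mode" concrete, here is a minimal sketch of the kind of VPA object involved, built as a Python dictionary and printed as JSON. It is a hand-written illustration, not what Goldilocks itself generates: the function name and the `my-app` and `demo` names are placeholders, and it assumes the VPA custom resource definitions are already installed in the cluster.

```python
import json


def vpa_recommendation_manifest(deployment: str, namespace: str) -> dict:
    """Build a VerticalPodAutoscaler manifest that only recommends, never updates."""
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": deployment, "namespace": namespace},
        "spec": {
            # Point the VPA at the Deployment whose usage it should watch.
            "targetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            # "Off" means the VPA computes recommendations but does not
            # evict pods or change their resource requests.
            "updatePolicy": {"updateMode": "Off"},
        },
    }


if __name__ == "__main__":
    # "my-app" and "demo" are placeholder names for this sketch.
    print(json.dumps(vpa_recommendation_manifest("my-app", "demo"), indent=2))
```

Since `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied directly. The recommendations then show up in the VPA object's status.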

At Fairwinds, we also integrate Goldilocks into Fairwinds Insights. Our offering within Insights uses Prometheus as well to produce more fine-grained resource usage data, which is particularly helpful for spiky workloads. Goldilocks and other simpler solutions work well for workloads that have very consistent memory and CPU usage, but for workloads that are a bit less predictable, it’s helpful to have the very fine-grained data that Insights provides.
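A small sketch shows why resolution matters for spiky workloads. The usage numbers are invented for illustration and the `window_averages` helper is hypothetical; this is not how Insights, Prometheus, or the VPA compute anything, only a demonstration that averaging over coarse windows can hide a short burst.

```python
from statistics import mean


def window_averages(samples: list[float], window: int) -> list[float]:
    """Average consecutive, non-overlapping windows of samples."""
    return [mean(samples[i:i + window]) for i in range(0, len(samples), window)]


# Hypothetical CPU usage in millicores, one sample every 10 seconds:
# mostly idle around 100m with a short burst to 900m.
usage = [100, 100, 100, 900, 900, 100, 100, 100, 100, 100, 100, 100]

fine_peak = max(usage)                        # peak at 10-second resolution
coarse_peak = max(window_averages(usage, 6))  # peak at 1-minute resolution

print(f"fine-grained peak: {fine_peak}m")
print(f"coarse peak: {coarse_peak:.0f}m")
```

In this made-up series the one-minute averages report a peak of roughly 367m, while the raw samples show the workload actually reached 900m. A CPU limit sized from the coarse view would throttle the burst.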

2) Is there a recommended distro to use for container images in terms of one that tends to have the fewest known CVEs?

This is a really good question. The general rule of thumb is the smaller the distro, the better. I wouldn’t recommend using Ubuntu, for example, as your base image. Alpine is generally the image that we go with internally. It has just enough in it to get the most basic tasks done: it has a shell, and there's a package manager so you can add packages, such as curl, if you need them inside your container.

The best thing to do, which we do whenever possible, is build your images from scratch. You won't have any kind of base distribution, so you'll need to add everything that you might need inside that container. This can be a bit painful, because you'll need to layer on a lot of base packages. The advantage is that when you build from scratch, there really isn’t anything extra, so the image is as pared down as a Docker image can be. The less there is in the image, the fewer possible CVEs you could run into.

3) Are some of the security risks mentioned in the presentation pre-handled by a service provider or managed services?

The security issues that we talked about in the webinar are not handled by service providers like Google Kubernetes Engine (GKE).

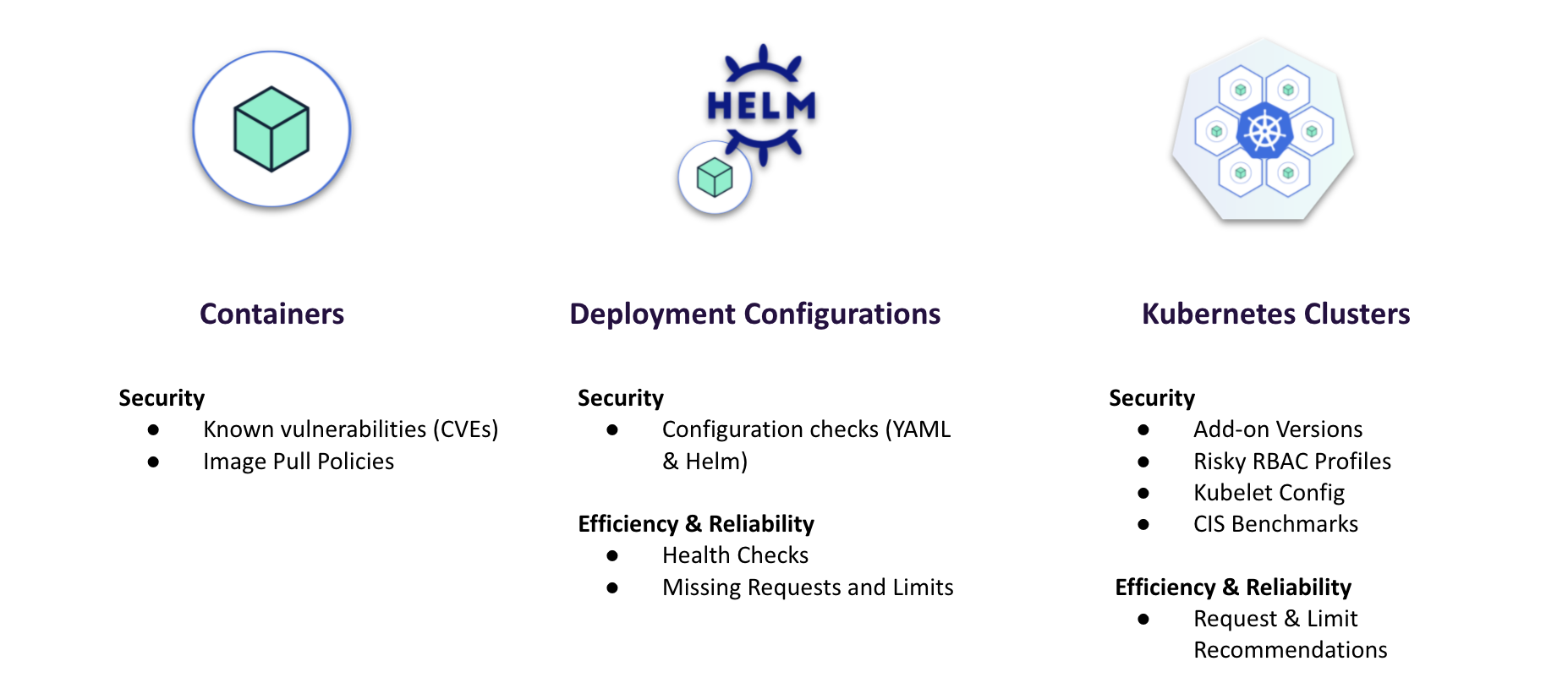

We mainly focused on the first two columns, container vulnerabilities and deployment configurations. GKE, Amazon Elastic Kubernetes Service (Amazon EKS), and Azure Kubernetes Service (AKS) can really help you with security risks in the right-hand column. They take care of a lot of the configuration of the Kubernetes API itself, and can help with the base configuration of Kubernetes to make sure that you're doing things in a secure way. They have a lot of those security settings built in by default, but they won't help you with a couple of other things in that column, including risky role-based access control (RBAC) profiles. You're still responsible for RBAC when you're running on GKE, and you're still responsible for the add-ons, including NGINX Ingress and certificate management. So yes, they do cover some of the risks, but there's still a lot you need to do on top of what these providers give you out of the box.
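As one illustration of what a "risky" RBAC profile can look like, the sketch below flags rules that use a `*` wildcard in a Role or ClusterRole. The `risky_rules` helper and the example role are hypothetical, and wildcards are only one heuristic; this is not how any particular tool audits RBAC.

```python
def risky_rules(role: dict) -> list[dict]:
    """Return the rules in a Role/ClusterRole manifest that use a "*" wildcard."""
    fields = ("verbs", "resources", "apiGroups")
    flagged = []
    for rule in role.get("rules", []):
        # A "*" in any of these fields grants far more than most workloads need.
        if any("*" in rule.get(field, []) for field in fields):
            flagged.append(rule)
    return flagged


# Hypothetical ClusterRole, written as the dict you would get from parsing its YAML.
role = {
    "apiVersion": "rbac.authorization.k8s.io/v1",
    "kind": "ClusterRole",
    "metadata": {"name": "example-role"},
    "rules": [
        {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list"]},
        {"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]},
    ],
}

for rule in risky_rules(role):
    print("wildcard rule:", rule)
```

Here only the second rule is flagged. The first rule is scoped to reading pods, which is the kind of narrow grant you generally want.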

4) Does Insights work with air-gapped clusters that are not accessible from the public internet?

Currently, the cluster needs some kind of allow list so that it can report back to Fairwinds Insights. We do have a self-hosted version that you can install on-premises, and we could work with you to get it running inside an air-gapped environment. Most of our customers use Fairwinds Insights as software as a service, but the self-hosted on-prem version could help you meet some of those requirements.

5) Do you have an opinion on the TLS handoff at ingress controller versus mTLS using service mesh?

There's a trade-off there, and it's really between simplicity and security. If you put in a service mesh using mTLS, you're going to have an additional level of security and an additional level of control over which applications can talk to each other. The out-of-the-box configuration with Kubernetes allows every workload in the cluster to talk to every other workload, and having a service mesh in place lets you restrict that. It's also likely to cause headaches for your development teams, because it affects which services their applications can reach, and they’ll have to configure those rules themselves.

It depends on your organization's appetite for security risk and on your organization's level of maturity. If you're just getting started with Kubernetes and you're a small scrappy startup, you're probably good with just a “vanilla” ingress. If you're running Kubernetes in an enterprise, I suggest going the service mesh route, especially if you have a multi-tenant cluster. When you have many teams sharing the same cluster whose workloads don't need to talk to each other, getting a service mesh in there with mTLS is going to be helpful for you.

Running Kubernetes Securely and Efficiently

As the application development process shifts left, developers need support to make the right decisions for the organization in order to run Kubernetes securely and efficiently. That can be challenging if you lack internal expertise and you’re still coming up to speed with Kubernetes best practices. We have an overview of the webinar here, and hope these questions from our audience help answer some of the questions you’ve been wondering about. If you have other questions, please reach out!