A Step-by-Step Guide to Deploying Your First Kubernetes Cluster with Terraform and GKE

To ensure Kubernetes best practices for building infrastructure, Fairwinds uses common patterns that provide both consistency and customization. Terraform is our tool of choice to manage the entire lifecycle of infrastructure with infrastructure as code. You can read why in a previous blog.

This blog provides a step-by-step guide on how to get started with Terraform to build your first Kubernetes cluster in GKE.

Prerequisites to deploying your first Kubernetes cluster

If you want to follow along and create your own GKE Cluster in Terraform, follow these steps.

- Create a Google Cloud account and log in

- Create a project in the Google Cloud Console

  - There is generally a default project created, which you can use, or click on the My First Project dropdown next to the Google Cloud Platform logo and create a new project.

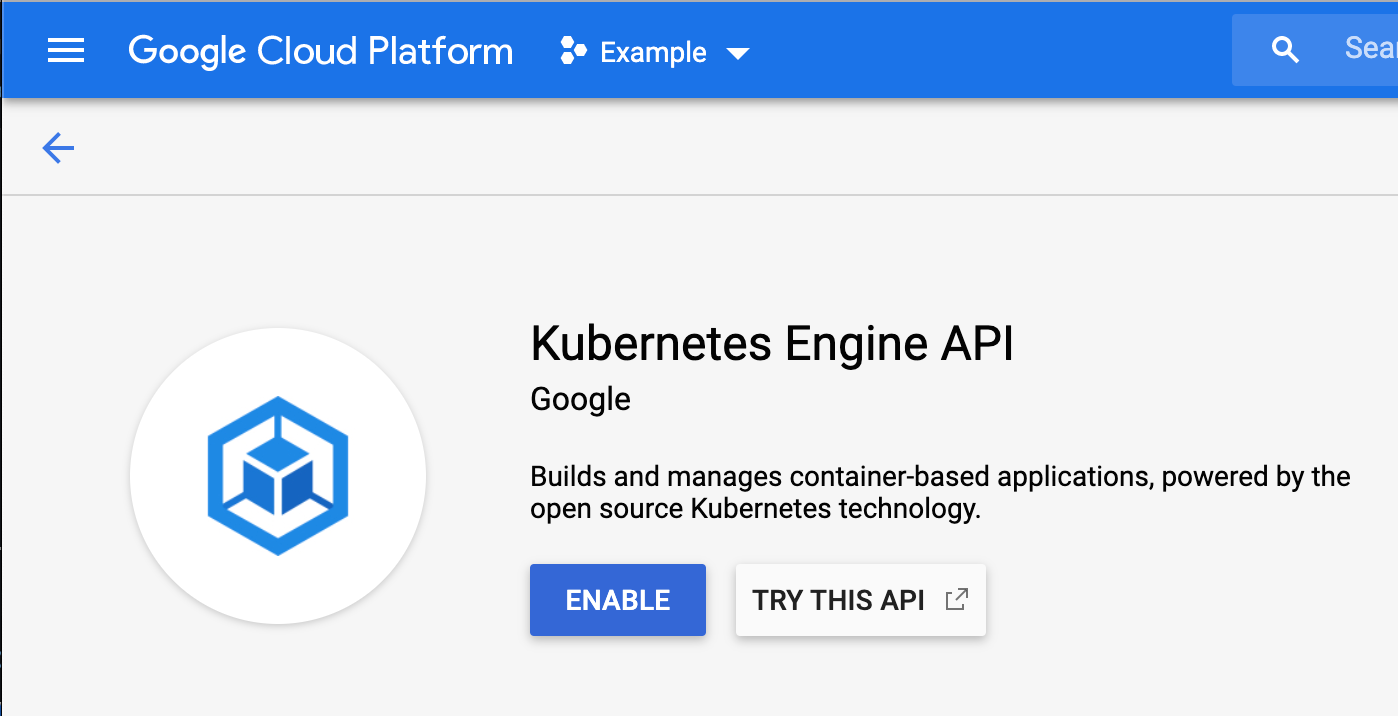

- Once the project is created and you have it selected in the dropdown, find Kubernetes Engine → Configuration on the left-hand side and enable the Kubernetes Engine API as shown below.

- Install the following:

  - Terraform (v0.12)

  - gcloud CLI (make sure to authenticate with gcloud auth login)

  - kubectl (v1.15.11)

Steps to deploying your first Kubernetes cluster

In your terminal, create a project directory for your Terraform files, like terraform-gke. The first file you create will be a file for the Google Terraform Provider, which lets Terraform know what types of resources it can create.

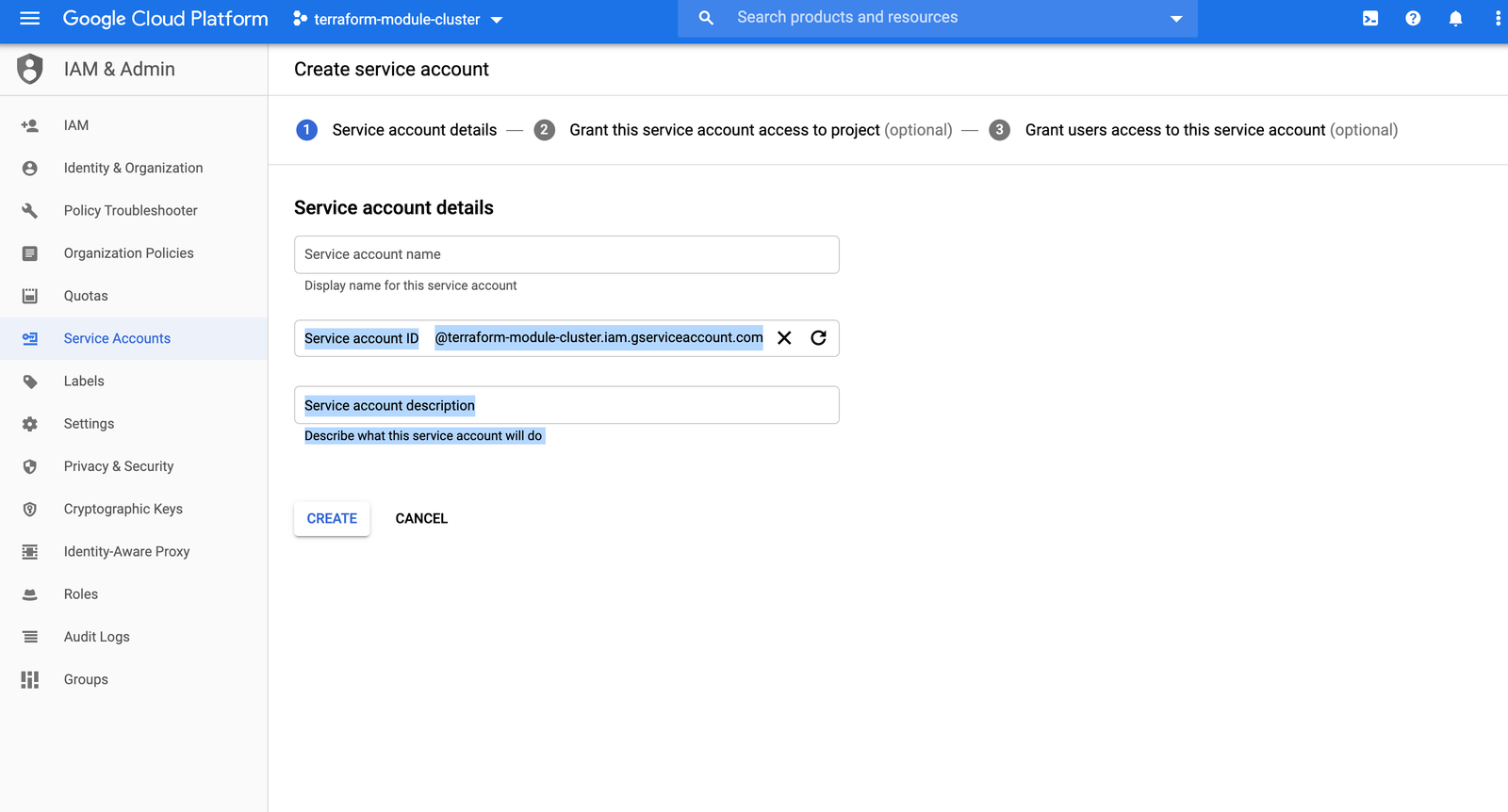

You will need a file with the credentials that Terraform needs to interact with the Google Cloud API to create the cluster and related networking components. Head to the IAM & Admin section of the Google Cloud Console’s navigation sidebar, and select Service Accounts. Once there, create a service account:

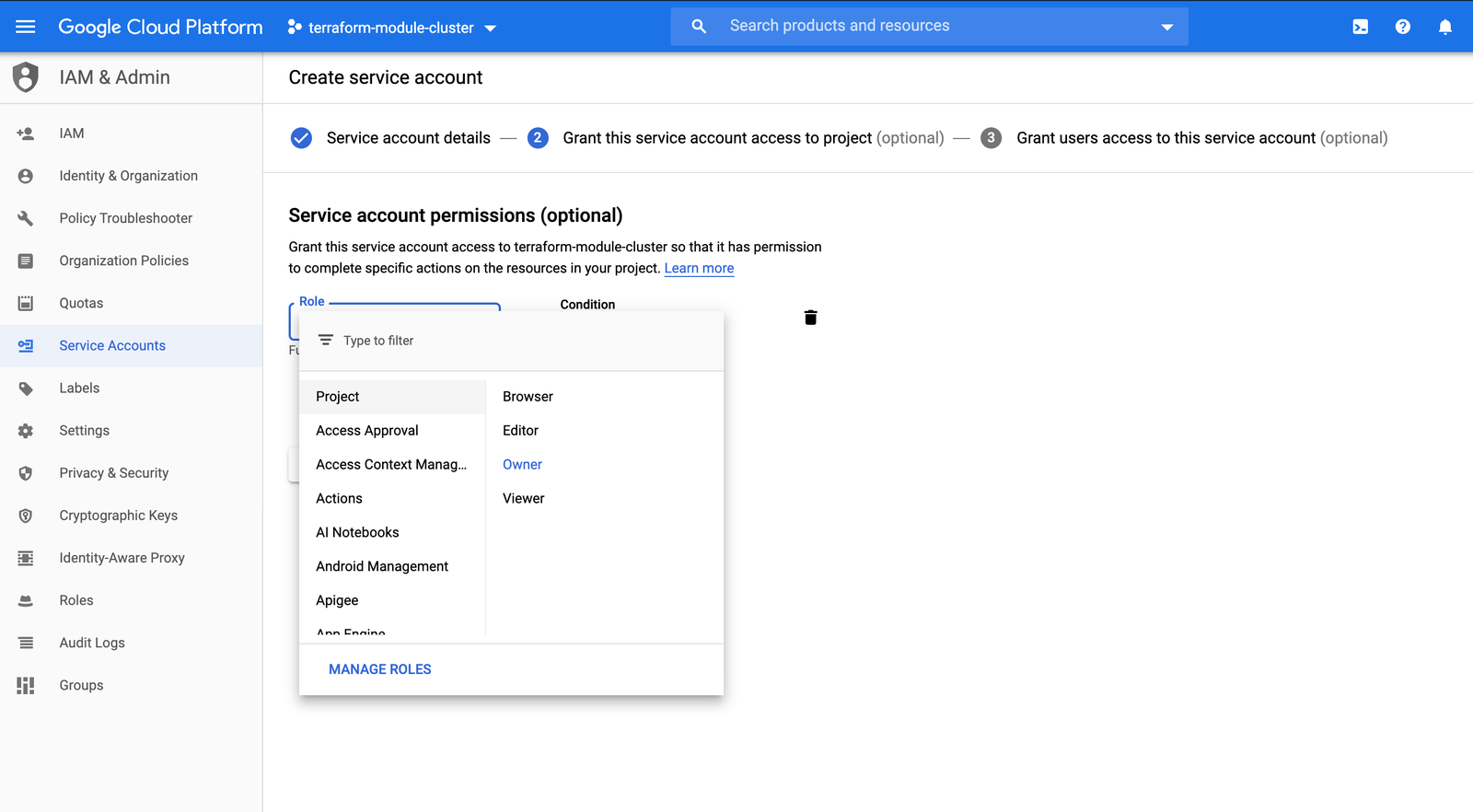

Once you have created the service account, you will be prompted to select a role for it. For the purposes of this exercise, you can select Project: Owner from the Role dropdown menu:

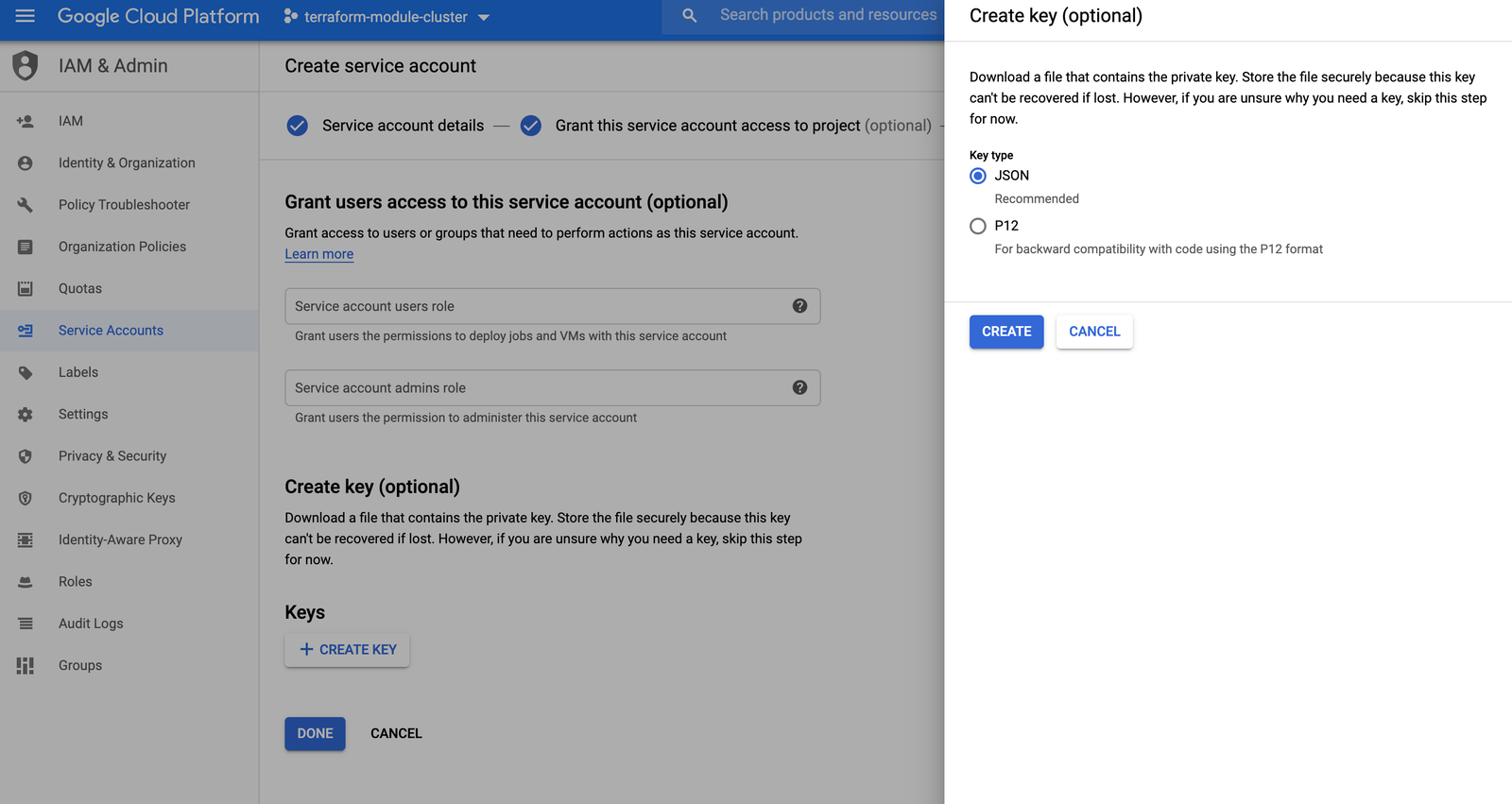

On the next page, click CREATE KEY and select the JSON key type. Once created, the key file will be downloaded to your computer. Move the file to the Terraform project directory.

Next, create a file named provider.tf, and add these lines of code:

    provider "google" {
      credentials = file("./.json")
      project     = ""
      region      = "us-central1"
      version     = "~> 2.5.0"
    }

Fill in the project value with the ID of the project you created in the GCP Console, and fill in the credentials filename with the name of the service account key file that you just downloaded and moved to the project folder. Once that is complete, create a new file called cluster.tf. Add this code to set up the network:

    module "network" {
      source = "git@github.com:FairwindsOps/terraform-gcp-vpc-native.git//default?ref=default-v2.1.0"

      // base network parameters
      network_name     = "kube"
      subnetwork_name  = "kube-subnet"
      region           = "us-central1"
      enable_flow_logs = "false"

      // subnetwork primary and secondary CIDRS for IP aliasing
      subnetwork_range    = "10.40.0.0/16"
      subnetwork_pods     = "10.41.0.0/16"
      subnetwork_services = "10.42.0.0/16"
    }
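The three ranges in the network module are adjacent, non-overlapping /16 blocks, one each for nodes, pods, and services. As an illustration only, the same values could be derived from a single parent block with Terraform's built-in cidrsubnet function. The local names and the 10.40.0.0/14 parent range below are assumptions made for this sketch and are not part of the Fairwinds module.

```hcl
# Hypothetical locals block: derives the three /16 ranges from one /14 parent.
# The names and the parent range are assumptions for illustration.
locals {
  base_cidr = "10.40.0.0/14"

  # cidrsubnet(prefix, newbits, netnum): a /14 plus 2 new bits gives a /16
  subnetwork_range    = cidrsubnet(local.base_cidr, 2, 0) # 10.40.0.0/16
  subnetwork_pods     = cidrsubnet(local.base_cidr, 2, 1) # 10.41.0.0/16
  subnetwork_services = cidrsubnet(local.base_cidr, 2, 2) # 10.42.0.0/16
}
```

The module inputs could then read subnetwork_range = local.subnetwork_range, and so on. Deriving the ranges from one parent makes it harder to introduce an overlap by accident when a value is edited by hand.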

You will notice in the source field that the network module is pulled from our git repo (https://github.com/FairwindsOps/terraform-gcp-vpc-native/tree/master/default). If you explore that repo, especially the main.tf file, you will see all the resources and variables that the module takes care of creating, like a network and subnetwork, so you don’t have to create them individually. You might notice that the module can also be used to create a Cloud NAT with private nodes, but we’ll keep things simple here. Next, add this module code to the cluster.tf file for the cluster itself:

    module "cluster" {
      source = "git@github.com:FairwindsOps/terraform-gke.git//vpc-native?ref=vpc-native-v1.2.0"

      region = "us-central1"
      name   = "gke-example"
      // replace with the ID of your own GCP project
      project                          = "terraform-module-cluster"
      network_name                     = "kube"
      nodes_subnetwork_name            = module.network.subnetwork
      kubernetes_version               = "1.16.10-gke.8"
      pods_secondary_ip_range_name     = module.network.gke_pods_1
      services_secondary_ip_range_name = module.network.gke_services_1
    }

You will notice that certain values, like module.network.subnetwork, are referenced from the network module. This feature of Terraform lets you set values in one module or resource and use them in others. Lastly, add this code to cluster.tf to set up the node pool that will contain the Kubernetes worker nodes:

    module "node_pool" {
      source = "git@github.com:/FairwindsOps/terraform-gke//node_pool?ref=node-pool-v3.0.0"

      name               = "gke-example-node-pool"
      region             = module.cluster.region
      gke_cluster_name   = module.cluster.name
      machine_type       = "n1-standard-4"
      min_node_count     = "1"
      max_node_count     = "2"
      kubernetes_version = module.cluster.kubernetes_version
    }
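The module references used in cluster.tf (module.network.subnetwork, module.cluster.name, and so on) work the same way inside output blocks. As an optional sketch, a separate file could surface a few of them once the cluster exists. The file name and the output names here are assumptions; the sketch only uses module outputs that cluster.tf already references.

```hcl
# outputs.tf (hypothetical file, not part of the original walkthrough)

output "cluster_name" {
  description = "Name of the GKE cluster, read from the cluster module"
  value       = module.cluster.name
}

output "cluster_region" {
  description = "Region of the GKE cluster, read from the cluster module"
  value       = module.cluster.region
}

output "nodes_subnetwork" {
  description = "Subnetwork created by the network module"
  value       = module.network.subnetwork
}
```

After a successful apply, running terraform output cluster_name should print the cluster name, which is handy when passing it to other tools.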

That’s it! Your Terraform files are all ready to go. The next step is to initialize Terraform by running terraform init. Terraform will generate a directory named .terraform and download each module source declared in cluster.tf. Initialization also pulls in any providers required by these modules; in this example, it downloads the google and random providers. If a backend is configured, Terraform will also set it up for storing the state file.

$ terraform init

Initializing modules...

Downloading git@github.com:FairwindsOps/terraform-gke.git?ref=vpc-native-v1.2.0 for cluster...

- cluster in .terraform/modules/cluster/vpc-native

Downloading git@github.com:FairwindsOps/terraform-gcp-vpc-native.git?ref=default-v2.1.0 for network...

- network in .terraform/modules/network/default

Downloading git@github.com:/FairwindsOps/terraform-gke?ref=node-pool-v3.0.0 for node_pool...

- node_pool in .terraform/modules/node_pool/node_pool

Initializing the backend...

Initializing provider plugins...

- Checking for available provider plugins...

- Downloading plugin for provider "google" (hashicorp/google) 2.20.3...

- Downloading plugin for provider "random" (hashicorp/random) 2.2.1...

The following providers do not have any version constraints in configuration,

so the latest version was installed.

To prevent automatic upgrades to new major versions that may contain breaking

changes, it is recommended to add version = "..." constraints to the

corresponding provider blocks in configuration, with the constraint strings

suggested below.

* provider.random: version = "~> 2.2"

Terraform has been successfully initialized!
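The init output suggests pinning the random provider, and init also sets up a backend when one is configured. A minimal sketch of both, in Terraform 0.12 syntax, might look like the following. The bucket name and prefix are placeholders invented for this example, and the Google Cloud Storage bucket is assumed to exist already.

```hcl
# Pin the random provider, using the constraint suggested by terraform init.
provider "random" {
  version = "~> 2.2"
}

# Optional remote state in Google Cloud Storage.
# "my-terraform-state-bucket" and the prefix are hypothetical placeholders;
# Terraform does not create the bucket for you.
terraform {
  backend "gcs" {
    bucket = "my-terraform-state-bucket"
    prefix = "terraform-gke"
  }
}
```

After adding or changing a backend block, run terraform init again so Terraform can configure it. The backend does not reuse the credentials set in the provider block, so it needs credentials of its own, for example through Google application default credentials.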

After Terraform has been successfully initialized, you should be able to run terraform plan. It is always a good idea to run terraform plan and review the output before allowing Terraform to make any changes.

$ terraform plan

Refreshing Terraform state in-memory prior to plan...

The refreshed state will be used to calculate this plan, but will not be

persisted to local or remote state storage.

------------------------------------------------------------------------

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

+ create

Terraform will perform the following actions:

# module.cluster.google_container_cluster.cluster will be created

+ resource "google_container_cluster" "cluster" {

+ additional_zones = (known after apply)

....

}

# module.network.google_compute_network.network will be created

+ resource "google_compute_network" "network" {

+ auto_create_subnetworks = false

....

}

# module.network.google_compute_subnetwork.subnetwork will be created

+ resource "google_compute_subnetwork" "subnetwork" {

+ creation_timestamp = (known after apply)

....

}

# module.node_pool.google_container_node_pool.node_pool will be created

+ resource "google_container_node_pool" "node_pool" {

+ cluster = "gke-example"

....

}

# module.node_pool.random_id.entropy will be created

+ resource "random_id" "entropy" {

+ b64 = (known after apply)

....

}

Plan: 5 to add, 0 to change, 0 to destroy.

Please note that this snippet has been edited slightly to cut down on the size of this article. As the plan shows, Terraform will add 5 resources: the network, the subnetwork (with secondary ranges for pods and services), the GKE cluster, the node pool, and a random_id. The random_id resource comes from the node pool module; it is used to keep track of changes to the node pool resource.

After reviewing the plan, apply the changes by running terraform apply. As one last validation step, Terraform will output the plan again and prompt for confirmation before applying. This step will take around 10-15 minutes to complete.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

module.node_pool.random_id.entropy: Creating...

module.node_pool.random_id.entropy: Creation complete after 0s [id=dcY]

module.network.google_compute_network.network: Creating...

module.network.google_compute_network.network: Still creating... [10s elapsed]

module.network.google_compute_network.network: Creation complete after 17s [id=kube]

module.network.google_compute_subnetwork.subnetwork: Creating...

module.network.google_compute_subnetwork.subnetwork: Still creating... [10s elapsed]

...

module.network.google_compute_subnetwork.subnetwork: Creation complete after 37s [id=us-central1/kube-subnet]

module.cluster.google_container_cluster.cluster: Creating...

module.cluster.google_container_cluster.cluster: Still creating... [10s elapsed]

...

module.cluster.google_container_cluster.cluster: Still creating... [7m50s elapsed]

module.cluster.google_container_cluster.cluster: Creation complete after 7m56s [id=gke-example]

module.node_pool.google_container_node_pool.node_pool: Creating...

module.node_pool.google_container_node_pool.node_pool: Still creating... [10s elapsed]

module.node_pool.google_container_node_pool.node_pool: Still creating... [20s elapsed]

...

module.node_pool.google_container_node_pool.node_pool: Still creating... [2m0s elapsed]

module.node_pool.google_container_node_pool.node_pool: Creation complete after 2m7s [id=us-central1/gke-example/gke-example-node-pool-75c6]

Apply complete! Resources: 5 added, 0 changed, 0 destroyed.

Please note that this snippet has been edited slightly to cut down on the size of this article.

Now that your cluster is provisioned, use gcloud to retrieve the cluster configuration for kubectl. This command merges the new cluster configuration into your KUBECONFIG, which defaults to ~/.kube/config.

$ gcloud container clusters get-credentials gke-example --region us-central1

Fetching cluster endpoint and auth data.

kubeconfig entry generated for gke-example.

Once the credentials are retrieved, confirm you can connect by running kubectl get nodes.

    $ kubectl get nodes
    NAME                                                  STATUS   ROLES    AGE   VERSION
    gke-gke-example-gke-example-node-pool-953475c5-lrm8   Ready    <none>   17m   v1.16.10-gke.8
    gke-gke-example-gke-example-node-pool-d9071150-m8mh   Ready    <none>   17m   v1.16.10-gke.8
    gke-gke-example-gke-example-node-pool-df7578a5-htw9   Ready    <none>   17m   v1.16.10-gke.8

Congratulations, you’ve successfully deployed a Kubernetes GKE cluster using Terraform! You can now begin deploying your applications to Kubernetes!